Using a multimeter that provides the right measurements is important. Knowing what those measurements mean is even more important. Accuracy and precision ensure that the measurements you take will be useful: higher precision makes readings easier to repeat, and higher accuracy means your readings will be closer to the true value.

What is the Accuracy of a Digital Multimeter?

A digital multimeter's accuracy depends on several factors, so there is no single, overarching answer.

Accuracy refers to the largest allowable error that occurs under specific operating conditions. It is expressed as a percentage and indicates how close the measurement displayed is to the actual (standard) value of the signal measured. Accuracy requires a comparison to an accepted industry standard.

How much a digital multimeter's accuracy matters depends on the application. For example, most AC power line voltages can vary by ±5% or more; a voltage measurement taken at a standard 115 V AC receptacle is one example of this variation. If a digital multimeter is used only to check whether a receptacle is energized, a meter with ±3% measurement accuracy is appropriate.

Some applications, such as calibration of automotive, medical, aviation, or specialized industrial equipment, may require higher accuracy. A reading of 100.0 V on a digital multimeter with an accuracy of ±2% means the actual voltage can be anywhere from 98.0 V to 102.0 V. This may be fine for some applications but unacceptable for more sensitive electronic equipment.

Accuracy may also include a specified number of digits (counts) added to the basic accuracy rating. For example, an accuracy of ±(2% + 2) means that a reading of 100.0 V on the multimeter can correspond to an actual voltage from 97.8 V to 102.2 V: 2% of 100.0 V is 2.0 V, and 2 counts in the last displayed digit add another 0.2 V. A digital multimeter with higher accuracy is suitable for a greater number of applications.
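The ±(% + counts) arithmetic can be checked with a short Python sketch. It assumes the percentage applies to the reading and that one count equals the resolution of the last displayed digit (0.1 V for a 100.0 V reading). The function name `reading_bounds` is hypothetical; a specific meter's datasheet defines exactly how its accuracy specification applies.

```python
def reading_bounds(reading, percent, counts=0, resolution=0.1):
    """Return (low, high) limits for a reading under a ±(percent% + counts) spec.

    Assumption: the percentage applies to the reading, and one count equals
    the display resolution of the range in use.
    """
    error = reading * percent / 100 + counts * resolution
    return reading - error, reading + error


# ±2% of reading, no counts
low, high = reading_bounds(100.0, 2)
print(f"{low:.1f} V to {high:.1f} V")

# ±(2% + 2) with 0.1 V resolution on the same reading
low, high = reading_bounds(100.0, 2, counts=2, resolution=0.1)
print(f"{low:.1f} V to {high:.1f} V")
```

The first call prints 98.0 V to 102.0 V and the second prints 97.8 V to 102.2 V, matching the two examples above.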

Basic DC accuracy of Fluke handheld digital multimeters ranges from 0.5% to 0.025%.

How Precise is a Digital Multimeter?

Precision refers to a digital multimeter’s ability to provide the same measurement repeatedly.

A common example used to explain precision is the arrangement of holes on a shooting-range target. This example presumes a rifle is aimed at the target’s bullseye and shot from the same position each time.

If the holes are tightly packed but outside the bullseye, the rifle (or shooter) can be considered precise but not accurate.

If the holes are tightly packed within the bullseye, the rifle is both accurate and precise. If the holes are spread randomly all over the target, it is neither accurate nor precise (nor repeatable).

In some circumstances, precision, or repeatability, is more important than accuracy. If measurements are repeatable, it’s possible to determine an error pattern and compensate for it.
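As a sketch of that idea, the Python below assumes the error is a constant offset and that a trusted reference value is available. It estimates the offset (an accuracy error) from repeated readings, uses the sample standard deviation of those readings as a measure of precision, and subtracts the offset from later readings. The readings and the names `characterize` and `compensate` are hypothetical, made up for illustration.

```python
from statistics import mean, stdev


def characterize(readings, reference):
    """Summarize repeated readings of a known reference value.

    offset: average difference from the reference (accuracy error)
    spread: sample standard deviation of the readings (precision)
    """
    offset = mean(readings) - reference
    spread = stdev(readings)
    return offset, spread


def compensate(reading, offset):
    """Remove a known, repeatable offset from a new reading."""
    return reading - offset


# Hypothetical readings of a 10.000 V reference: tightly grouped (precise)
# but about 0.05 V high (not accurate), like holes clustered off the bullseye
readings = [10.051, 10.049, 10.050, 10.052, 10.048]
offset, spread = characterize(readings, 10.000)
print(f"offset = {offset:+.3f} V, spread = {spread:.4f} V")
print(f"corrected reading: {compensate(10.050, offset):.3f} V")
```

This correction only works because the readings are repeatable; if they were scattered, the estimated offset would not reliably apply to the next reading.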

What Does Resolution Measurement Mean?

Resolution is the smallest increment a tool can detect and display.

For a nonelectrical example, consider two rulers. One marked with 1/16-inch hatch marks offers greater resolution than one marked with quarter-inch hatch marks.

Imagine a simple test of a 1.5 V household battery. If a digital multimeter has a resolution of 1 mV on the 3 V range, a change of 1 mV is visible while reading the voltage: on that range, the user can see changes as small as one one-thousandth of a volt (0.001 V).

Resolution may be listed in a meter's specifications as maximum resolution, which is the smallest value that can be discerned on the meter's lowest range setting.

For example, a maximum resolution of 100 mV (0.1 V) means that when the multimeter is set to its lowest range, the voltage will be displayed to the nearest tenth of a volt.

Resolution is improved by reducing the digital multimeter's range setting as long as the measurement is within the set range.
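A short Python sketch can show why the range setting affects resolution. It models only the display step, rounding to the number of decimal places a range shows, and ignores the meter's other errors. The battery voltages and the `displayed` helper are hypothetical; the 1 mV and 10 mV steps correspond to the 3 V and 30 V ranges.

```python
def displayed(value, decimals):
    """Round a value to the decimal places a range can display.

    Simplification: models only the display step, not noise or accuracy error.
    """
    return round(value, decimals)


# Hypothetical battery voltages, 1 mV apart
before, after = 1.5372, 1.5382

# 3 V range, 1 mV resolution (three decimal places)
print(f"{displayed(before, 3):.3f} V -> {displayed(after, 3):.3f} V")

# 30 V range, 10 mV resolution (two decimal places)
print(f"{displayed(before, 2):.2f} V -> {displayed(after, 2):.2f} V")
```

On the 3 V range, the 1 mV change appears as 1.537 V becoming 1.538 V; on the 30 V range, both readings display as 1.54 V.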

What is the Range of a Multimeter?

Digital multimeter range and resolution are related, and a digital multimeter's specifications sometimes list them together.

Many multimeters offer an autorange function that automatically selects the appropriate range for the magnitude of the measurement being made. This provides both a meaningful reading and the best resolution of a measurement.

If the measurement is higher than the set range, the multimeter will display OL (overload). The most accurate measurement is obtained at the lowest possible range setting without overloading the multimeter.

Range and Resolution

| Range | Resolution |
|---|---|
| 300.0 mV | 0.1 mV (0.0001 V) |
| 3.000 V | 1 mV (0.001 V) |
| 30.00 V | 10 mV (0.01 V) |
| 300.0 V | 100 mV (0.1 V) |
| 1000 V | 1000 mV (1 V) |
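Autoranging can be sketched as picking the lowest range that still contains the measured value, using the ranges and resolutions from the table above. This Python sketch treats each listed range value as the overload limit, which is a simplifying assumption (actual overload thresholds depend on the meter's count), and the `autorange` function and `RANGES` list are hypothetical.

```python
# (full scale, resolution) in volts, lowest range first, from the range table
RANGES = [
    (0.3, 0.0001),
    (3.0, 0.001),
    (30.0, 0.01),
    (300.0, 0.1),
    (1000.0, 1.0),
]


def autorange(volts):
    """Return (full_scale, resolution) of the lowest range that fits the value.

    Returns None when the value exceeds every range (the meter would show OL).
    Assumption: a value is in range if its magnitude does not exceed full scale.
    """
    for full_scale, resolution in RANGES:
        if abs(volts) <= full_scale:
            return full_scale, resolution
    return None


for v in (0.1234, 1.5, 120.0, 1500.0):
    choice = autorange(v)
    if choice is None:
        print(f"{v} V -> OL")
    else:
        full_scale, resolution = choice
        print(f"{v} V -> {full_scale} V range, {resolution} V resolution")
```

Because the ranges are checked from lowest to highest, the first match is also the range with the finest resolution for that value.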

What is the Difference Between Counts and Digits?

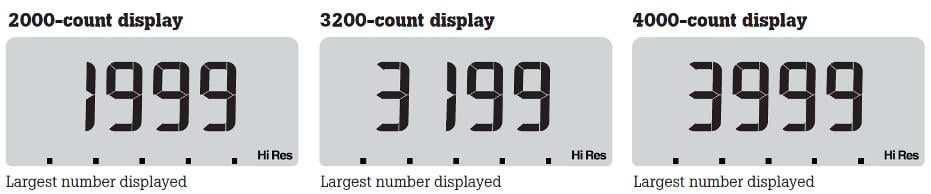

Counts and digits are terms used to describe a digital multimeter's resolution. Today it is more common to classify digital multimeters by the total counts than by digits.

Counts: A digital multimeter's resolution is also specified in counts. Higher counts provide better resolution for certain measurements. For example, a multimeter with a maximum display of 1999 (a 2000-count meter) cannot show tenths of a volt when measuring 200 V or more, because 200.0 V would require a display of 2000. Fluke offers 3½-digit digital multimeters with counts of up to 6000 (meaning a maximum of 5999 on the meter's display) and 4½-digit meters with counts of either 20000 or 50000.
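The 200 V example generalizes: a value can be shown with d decimal places only if the value multiplied by 10^d fits within the maximum display. This Python sketch uses a hypothetical `max_decimals` helper, assumes the meter has a range for every decimal position (real meters may not), and uses `Decimal` so that values such as 199.9 are compared exactly rather than through binary floating point.

```python
from decimal import Decimal


def max_decimals(value, max_display, most=4):
    """Return the most decimal places `value` can be shown with, or None.

    max_display is the largest number the display can show, ignoring the
    decimal point (for example 1999 or 5999). None means the value never
    fits, which a meter would report as OL.
    """
    v = abs(Decimal(str(value)))
    for d in range(most, -1, -1):
        # Value expressed in display counts when shown with d decimal places
        if v.scaleb(d) <= max_display:
            return d
    return None


# Maximum display 1999 versus maximum display 5999
for value in (199.9, 200.0, 450.0):
    print(value, max_decimals(value, 1999), max_decimals(value, 5999))
```

With a maximum display of 1999, 199.9 V still shows tenths but 200.0 V and 450.0 V drop to whole volts, while a meter with a maximum display of 5999 shows tenths for all three values.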

Digits: The Fluke product line includes 3½- and 4½-digit digital multimeters. A 3½-digit digital multimeter, for example, can display three full digits and a half digit. Each full digit can display a number from 0 to 9. The half digit, which is the most significant digit, displays a 1 or remains blank. A 4½-digit digital multimeter can display four full digits and a half digit, giving it higher resolution than a 3½-digit meter.
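Under the classic definition described here, where the half digit shows only 1 or blank, the largest displayable number follows directly from the number of full digits. This hypothetical `max_display` helper sketches that arithmetic in Python. As the counts paragraph notes, some current meters, such as 6000-count 3½-digit models, let the leading digit go higher than 1, so their count describes them more precisely than the digit rating does.

```python
def max_display(full_digits, half_digit=True):
    """Largest number a display can show, ignoring the decimal point.

    Classic definition: each full digit shows 0-9, and the optional leading
    half digit shows only 1 or stays blank.
    """
    largest = 10 ** full_digits - 1      # all full digits at 9, e.g. 999
    if half_digit:
        largest += 10 ** full_digits     # leading half digit set to 1, e.g. 1999
    return largest


print(max_display(3))   # 3½ digits
print(max_display(4))   # 4½ digits
```

These give 1999 and 19999, corresponding to 2000-count and 20000-count displays.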